Unveiling the Power of ETL: Transforming Data into Actionable Insights

Introduction:

In the dynamic landscape of data-driven decision-making, businesses are constantly challenged by the need to integrate, transform, and harness vast amounts of data from diverse sources. ETL, an acronym for Extract, Transform, Load, emerges as a cornerstone process in this journey, facilitating the seamless flow of data from disparate origins to a unified destination. In this exploration, we delve into the intricacies of ETL, examining its fundamental principles, methodologies, significance, and the transformative impact it has on unlocking the full potential of data.

Demystifying ETL:

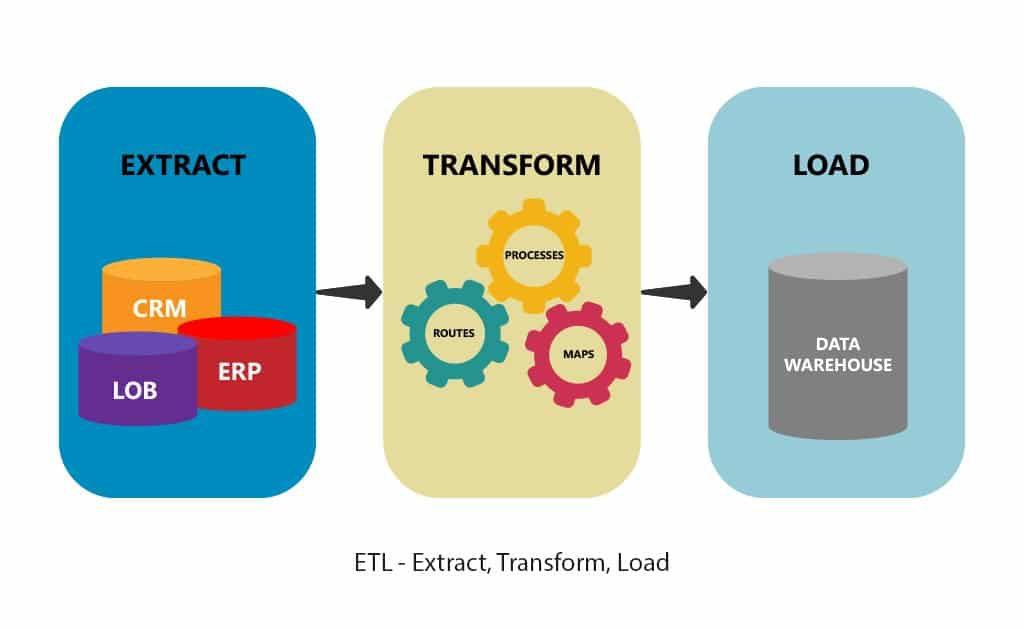

At its essence, ETL is a data integration process that involves three fundamental steps:

Extract:

Extraction gathers data from various sources, which may include databases, spreadsheets, logs, or external APIs. The aim is to pull only the relevant data from source systems for further processing.

Transform:

Once extracted, the data undergoes transformation, where it is cleaned, standardized, and structured to align with the requirements of the target system or data warehouse. Transformation ensures data consistency and enhances its quality.

Load:

The transformed data is loaded into a destination system, typically a data warehouse, where it becomes part of a centralized and unified dataset. Loading involves organizing the data for efficient storage and retrieval.
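The three steps above can be sketched end to end in a few lines. This is a minimal illustration using only the Python standard library, not a production pipeline: the inline CSV text, the `orders` table, and the function names are all assumptions made up for the example, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this might be a file, a database, or an API.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,BOB,5.00
3,carol,
"""

def extract(text):
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim and normalize names, parse amounts, drop incomplete rows."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip rows with a missing amount
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().title(), float(amount))
        )
    return cleaned

def load(conn, records):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

def run_pipeline(conn, text=RAW_CSV):
    """Run extract, transform, and load in sequence and return the loaded rows."""
    load(conn, transform(extract(text)))
    return conn.execute(
        "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
    ).fetchall()
```

Calling `run_pipeline(sqlite3.connect(":memory:"))` loads the two complete rows and skips the third, whose amount is missing. Keeping each stage as a separate function makes it easier to test and replace stages independently.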

The Significance of ETL:

Data Unification:

ETL plays a pivotal role in unifying data from diverse sources. By extracting, transforming, and loading data into a central repository, businesses can achieve a consolidated and comprehensive view of their operations.

Consistency and Quality:

Through the transformation phase, ETL processes clean and standardize data, ensuring consistency and quality. This step is crucial for preventing errors and inconsistencies from propagating through the analytics pipeline.

Enabling Analytics:

ETL processes pave the way for advanced analytics and business intelligence. By providing a structured and reliable dataset, organizations can derive meaningful insights, make informed decisions, and identify patterns within their data.

Regulatory Compliance:

In industries with stringent regulatory requirements, such as finance and healthcare, ETL processes help ensure that data adheres to compliance standards by applying the same validation and handling rules to every record. This is critical for maintaining transparency and trust.

Operational Efficiency:

ETL processes streamline data workflows, reducing the manual effort required for data integration. This results in increased operational efficiency, allowing organizations to focus on deriving value from their data.

Historical Analysis:

ETL processes often include the capture of historical data, enabling businesses to conduct trend analysis and understand how their metrics have evolved. This historical perspective is valuable for strategic planning.

Methods of ETL Processing:

Batch Processing:

In batch processing, data is collected, processed, and loaded in predefined batches, typically during off-peak hours. This method is suitable for scenarios where real-time data updates are not critical.
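As a rough sketch of the batching idea, the helper below groups an iterable of rows into fixed-size batches and hands each batch to a loader. The names `batches`, `load_in_batches`, and `load_batch` are hypothetical, and scheduling the run for off-peak hours is left to an external scheduler.

```python
from itertools import islice

def batches(rows, size):
    """Yield successive lists of at most `size` rows from any iterable."""
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch

def load_in_batches(rows, size, load_batch):
    """Pass each batch to `load_batch` and return the number of batches loaded."""
    count = 0
    for batch in batches(rows, size):
        load_batch(batch)
        count += 1
    return count
```

Seven rows with a batch size of three would be loaded as three batches, the last one holding the single leftover row.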

Real-Time Processing:

Real-time ETL processes involve the continuous extraction, transformation, and loading of data as it becomes available. This approach is essential for industries requiring up-to-the-minute insights, such as finance or online retail.
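By contrast with batching, a streaming pipeline handles each record as soon as it arrives. The sketch below uses a standard-library `queue.Queue` as a stand-in for a real message stream; the `STOP` sentinel, the `stream_etl` function, and the list used as a sink are assumptions for illustration only.

```python
import queue

STOP = object()  # sentinel that tells the consumer to shut down

def stream_etl(events, sink, transform):
    """Transform and load each event as it arrives, until STOP is received."""
    processed = 0
    while True:
        event = events.get()  # blocks until the next event is available
        if event is STOP:
            break
        sink.append(transform(event))
        processed += 1
    return processed
```

In a real deployment the producer would run in another thread or process, and the sink would be the target system rather than a list.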

Incremental Loading:

Instead of reloading the entire dataset each time, incremental loading involves updating only the new or modified data. This reduces processing time and resource requirements, making it more efficient for large datasets.
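One common way to implement incremental loading is a high-water mark: remember the newest modification timestamp already loaded and fetch only rows newer than that. The sketch below assumes a hypothetical `customers` table with an `updated_at` column holding ISO 8601 strings, and uses two SQLite connections as stand-ins for the source and target systems.

```python
import sqlite3

def incremental_load(source, target):
    """Copy only the source rows modified since the last load; return how many."""
    target.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
    )
    # High-water mark: the newest timestamp already present in the target.
    (watermark,) = target.execute(
        "SELECT COALESCE(MAX(updated_at), '') FROM customers"
    ).fetchone()
    changed = source.execute(
        "SELECT id, name, updated_at FROM customers WHERE updated_at > ?",
        (watermark,),
    ).fetchall()
    # Upsert so that modified rows replace their older versions.
    target.executemany(
        "INSERT OR REPLACE INTO customers (id, name, updated_at) VALUES (?, ?, ?)",
        changed,
    )
    target.commit()
    return len(changed)
```

This simple version does not detect deleted rows, and it can miss a row that is written with exactly the same timestamp as the current watermark, so real pipelines often add an overlap window or rely on change data capture.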

Components of ETL:

Data Extraction Tools:

Tools such as Apache NiFi, Talend, or Informatica PowerCenter are employed to extract data from source systems, ensuring compatibility and efficiency.

Transformation Engines:

Transformation involves cleaning, enriching, and structuring data. Processing engines such as Apache Spark, integration tools such as Microsoft SSIS, and Python libraries such as pandas are commonly used for this purpose.

Loading Mechanisms:

The destination is typically a data warehouse such as Amazon Redshift, Google BigQuery, or Snowflake. These platforms provide bulk-loading mechanisms and are designed for the efficient storage and retrieval of data.

Job Scheduling and Monitoring:

ETL processes often involve complex workflows that need to be scheduled and monitored. Tools like Apache Airflow or Control-M aid in orchestrating and overseeing ETL jobs.
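At the core of any orchestrator is the rule that a job runs only after the jobs it depends on. The sketch below illustrates that ordering with the standard-library `graphlib` module (Python 3.9 or later). It is not how Airflow or Control-M is configured; `run_jobs` and the job names are hypothetical, and real orchestrators add scheduling, retries, and monitoring on top.

```python
from graphlib import TopologicalSorter

def run_jobs(dependencies, jobs):
    """Run each job after its prerequisites and return the execution order.

    `dependencies` maps a job name to the set of jobs that must finish first.
    `jobs` maps every job name to a zero-argument callable.
    """
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        jobs[name]()
    return order
```

For example, with `{"transform": {"extract"}, "load": {"transform"}}` the jobs run as extract, then transform, then load. A circular dependency raises `graphlib.CycleError` before any job starts.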

Metadata Management:

Proper documentation of metadata, which includes information about the source, structure, and transformations applied to the data, is critical for maintaining a clear understanding of the ETL process.
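One lightweight way to capture this metadata is to record it alongside each pipeline run. The `RunMetadata` class below is a hypothetical sketch, not a standard schema: it stores the source, the column names, and the list of transformations in the order they were applied.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

@dataclass
class RunMetadata:
    """Describes one ETL run: where data came from and what was done to it."""
    source: str
    columns: list
    transformations: list = field(default_factory=list)
    started_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def record(self, step):
        """Append a human-readable description of a transformation step."""
        self.transformations.append(step)
```

Calling `asdict(meta)` on an instance turns the record into a plain dictionary that can be written to a log or a metadata table.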

Challenges in ETL:

Data Quality Issues:

Inaccuracies, inconsistencies, and errors in source data can propagate through the ETL process, impacting the quality of the integrated dataset.
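A common safeguard is to validate rows before loading and to set aside the ones that fail, instead of letting them flow into the target. The sketch below assumes rows are dictionaries; the two rules and the field names `id` and `amount` are illustrative only.

```python
def split_by_quality(rows, rules):
    """Return (valid, rejected); rejected pairs each bad row with its failed rules."""
    valid, rejected = [], []
    for row in rows:
        failed = [name for name, check in rules if not check(row)]
        if failed:
            rejected.append((row, failed))
        else:
            valid.append(row)
    return valid, rejected

# Hypothetical rules: each is a (description, predicate) pair.
RULES = [
    ("id present", lambda r: r.get("id") is not None),
    (
        "amount non-negative",
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    ),
]
```

Keeping the rejected rows together with the names of the rules they failed makes it possible to report problems back to the owners of the source system.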

Scalability:

As data volumes grow, ensuring the scalability of ETL processes becomes crucial. Inefficient processes may lead to bottlenecks and performance issues.

Complexity:

ETL processes can become highly complex, especially in scenarios involving multiple sources, complex transformations, and intricate business logic.

Real-Time Constraints:

Achieving real-time ETL processing introduces challenges related to latency, data freshness, and the need for rapid decision-making.

Data Security:

Handling sensitive information during the ETL process requires robust security measures to protect data integrity and privacy.
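One such measure is to pseudonymize sensitive fields during transformation, so that the warehouse never stores the raw values but records can still be joined on the masked value. The sketch below uses a keyed hash (HMAC-SHA256) from the standard library; `mask_row`, the field names, and the key handling are assumptions, and in practice the key would come from a secrets manager, not from source code.

```python
import hashlib
import hmac

def pseudonymize(value, key):
    """Return a keyed SHA-256 digest of `value` as a hex string."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_row(row, sensitive, key):
    """Replace the values of sensitive fields with their pseudonyms."""
    return {
        name: pseudonymize(str(value), key) if name in sensitive else value
        for name, value in row.items()
    }
```

Because the same input and key always produce the same digest, masked values remain usable as join keys. Pseudonymization alone is not full anonymization and does not replace access controls or encryption in transit and at rest.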

Emerging Trends in ETL:

Cloud-Based ETL:

Leveraging cloud platforms for ETL processes offers scalability, flexibility, and cost-effectiveness, allowing organizations to adapt to dynamic data landscapes.

Data Lakes:

ETL processes are evolving to integrate with data lakes, enabling organizations to store and analyze vast amounts of raw, unstructured data alongside structured datasets.

Automated ETL:

The rise of automation tools and machine learning in ETL processes streamlines workflows, reduces manual effort, and enhances efficiency.

Serverless ETL:

Serverless computing models, where computing resources are automatically provisioned and scaled, are gaining traction in ETL for their cost-effectiveness and simplicity.

Applications Across Industries:

Retail:

ETL processes enable retailers to integrate data from various sources, including sales, inventory, and customer interactions, for optimized supply chain management and personalized marketing.

Healthcare:

ETL is crucial in healthcare for unifying patient records, streamlining billing processes, and facilitating analytics for improved patient care.

Finance:

In finance, ETL processes support risk management, fraud detection, and compliance by integrating data from diverse financial instruments and sources.

Manufacturing:

ETL plays a role in optimizing manufacturing processes by integrating data from production lines, supply chains, and quality control systems.

Telecommunications:

ETL is vital in telecommunications for managing network performance, customer billing, and improving service quality through integrated data analytics.

The Road Ahead:

As the landscape of data continues to evolve, ETL processes will remain a cornerstone in the quest for actionable insights. Organizations must embrace innovations, such as cloud-based solutions, automation, and real-time processing, to stay ahead in the data-driven era.

Conclusion: Transforming Data into Wisdom:

In the intricate dance of data integration, ETL emerges as the choreographer, orchestrating the seamless flow of information from diverse sources to a unified destination. The power of ETL lies not only in its ability to transform and load data but also in its role as an enabler of insight, innovation, and informed decision-making. As organizations navigate the complexities of the digital age, ETL stands as a key protagonist, transforming raw data into the wisdom that propels them forward in an increasingly data-centric world.